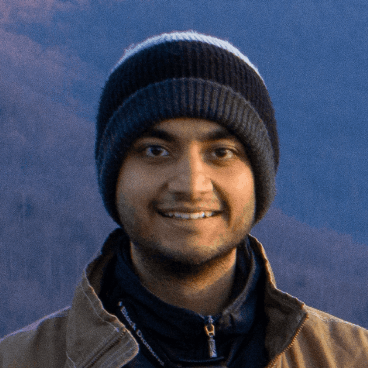

Dr. Aditi Kathpalia

Postdoctoral Researcher

Department of Complex Systems

Institute of Computer Science

Czech Academy of Sciences

Czech Republic

Causality and machine learning

Abstract

Despite the recent success and widespread application of machine learning (ML) algorithms for classification and prediction in a variety of fields, they face difficulties with interpretability, trustworthiness and generalization. One of the main reasons is that these algorithms build black-box models by learning statistical associations between a given ‘input’ and its ‘output’. Decisions based solely on ‘associational learning’ cannot provide explanations and are therefore difficult to employ in real-world tasks requiring transparency and reliability. To overcome these limitations, researchers are moving towards ‘causal machine learning’, which aids ML decision-making with causal reasoning and understanding. We will discuss ‘the science of causality’, the need for it in ML and possible means of integrating it with ML. We will also compare different ML algorithms based on their performance in learning temporal order/structure in single time series as well as their ability to classify coupled pairs of time series based on their cause-effect (or driver-driven) relationship.

Speaker Bio

Aditi Kathpalia is currently a postdoctoral researcher at the Department of Complex Systems, Institute of Computer Science of the Czech Academy of Sciences in the Czech Republic. Her research interests include causal inference and causal machine learning, complex systems, information theory and computational neuroscience. She has co-authored 8 international journal publications and presented her work at 16 international conferences and workshops. She completed her PhD in 2021 with the dissertation titled ‘Theoretical and Experimental Investigations into Causality, its Measures and Applications’ at the National Institute of Advanced Studies (NIAS), IISc Campus, Bengaluru, India. She graduated as a gold medallist with a bachelor's and master's (dual degree) in Biomedical Engineering from the Indian Institute of Technology (BHU), Varanasi, India in 2015.

Video of talk

Prof Erik M Bollt

Professor of Mathematics

Director, Clarkson Center for Complex Systems Science

Clarkson University

Potsdam, NY, USA

On explaining the surprising success of reservoir computing forecaster of chaos?

Abstract

Machine learning has become a widely popular and successful paradigm, especially in data-driven science and engineering. A major application problem is data-driven forecasting of future states from a complex dynamical system. Artificial neural networks have evolved as a clear leader among many machine learning approaches, and recurrent neural networks are considered to be particularly well suited for forecasting dynamical systems. In this setting, the echo-state networks or reservoir computers (RCs) have emerged for their simplicity and computational complexity advantages. Instead of a fully trained network, an RC trains only readout weights by a simple, efficient least squares method. What is perhaps quite surprising is that an RC nonetheless succeeds in making high-quality forecasts, competitively with more intensively trained methods, even if not the leader. There remains an unanswered question as to why and how an RC works at all despite randomly selected weights. To this end, this work analyzes a further simplified RC, where the internal activation function is an identity function. Our simplification is presented not for the sake of tuning or improving an RC, but for the sake of analysis: what we take to be the surprise is not that an RC does not work better, but that such random methods work at all. We explicitly connect the RC with linear activation and linear readout to the well-developed time-series literature on vector autoregressive (VAR) averages, which includes theorems on representability through the Wold theorem; a VAR already performs reasonably for short-term forecasts. In the case of a linear activation and the now popular quadratic readout RC, we explicitly connect to a nonlinear VAR, which performs quite well. Furthermore, we associate this paradigm with the now widely popular dynamic mode decomposition; thus, these three are in a sense different faces of the same thing.
We illustrate our observations in terms of popular benchmark examples including Mackey–Glass differential delay equations and the Lorenz63 system.
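
As a rough illustration of the simplified RC described in the abstract (random, untrained internal weights, identity activation, and a readout fitted by least squares), here is a minimal NumPy sketch. It is not the speaker's code. The reservoir size, spectral radius, ridge penalty, washout length and the sine-wave test signal are all assumptions chosen for the example, and the function names `run_linear_reservoir` and `fit_readout` are hypothetical.

```python
import numpy as np


def run_linear_reservoir(x, A, w_in):
    """Drive a reservoir with identity activation: r_t = A r_{t-1} + w_in * x_t."""
    states = np.zeros((len(x), A.shape[0]))
    r = np.zeros(A.shape[0])
    for t, x_t in enumerate(x):
        r = A @ r + w_in * x_t  # no nonlinearity: the state is linear in past inputs
        states[t] = r
    return states


def fit_readout(states, targets, ridge):
    """Ridge-regularised least squares for the linear readout weights."""
    gram = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(gram, states.T @ targets)


# Illustrative settings (assumptions, not taken from the talk).
rng = np.random.default_rng(0)
n_res, spectral_radius, ridge, washout = 20, 0.8, 1e-6, 100

# Random internal weights, rescaled to the chosen spectral radius; never trained.
A = rng.standard_normal((n_res, n_res))
A *= spectral_radius / np.max(np.abs(np.linalg.eigvals(A)))
w_in = rng.standard_normal(n_res)

# Toy scalar signal standing in for a real benchmark series.
x = np.sin(0.3 * np.arange(600))
states = run_linear_reservoir(x, A, w_in)

# Train: predict x[t + 1] from the state reached after seeing x[t].
n_train = 400
w_out = fit_readout(states[washout:n_train - 1], x[washout + 1:n_train], ridge)

# Evaluate one-step-ahead forecasts on the held-out tail.
pred = states[n_train:-1] @ w_out
rmse = np.sqrt(np.mean((pred - x[n_train + 1:]) ** 2))
print(f"held-out one-step RMSE: {rmse:.2e}")
```

Because the activation is the identity, each reservoir state is a fixed linear combination of past inputs, so the fitted readout amounts to a linear autoregression on the input history, which is the connection to VAR models that the abstract draws. Only `w_out` is learned; `A` and `w_in` stay at their random values.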

Speaker Bio

Prof Erik Bollt is the W. Jon Harrington Professor of Mathematics at Clarkson University and the Director of the Clarkson Center for Complex Systems Science. He received his PhD from the University of Colorado, working with Prof. Jim Meiss. His research interests lie in the data-driven analysis of complex systems and dynamical systems, machine learning and data science methods, and network science.

Video of talk